Ajabny Project: Building an AI-Powered Content Moderation App

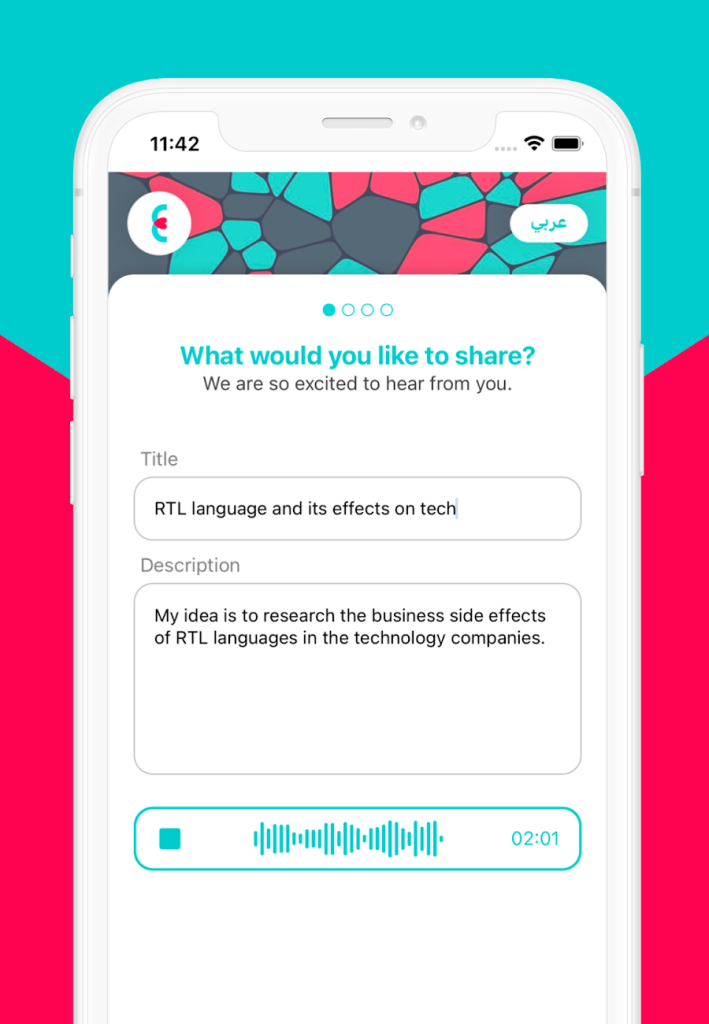

Aram, a production company based in Saudi Arabia, partnered with aiXplain to develop Ajabni, a custom app for a television show that lets viewers contribute ideas in text, audio, or video format. To ensure that submitted content was appropriate, the app also needed a content moderation system. The goal was to use machine learning algorithms to automatically detect and remove inappropriate content, such as hate speech, nudity, and violence.

Process

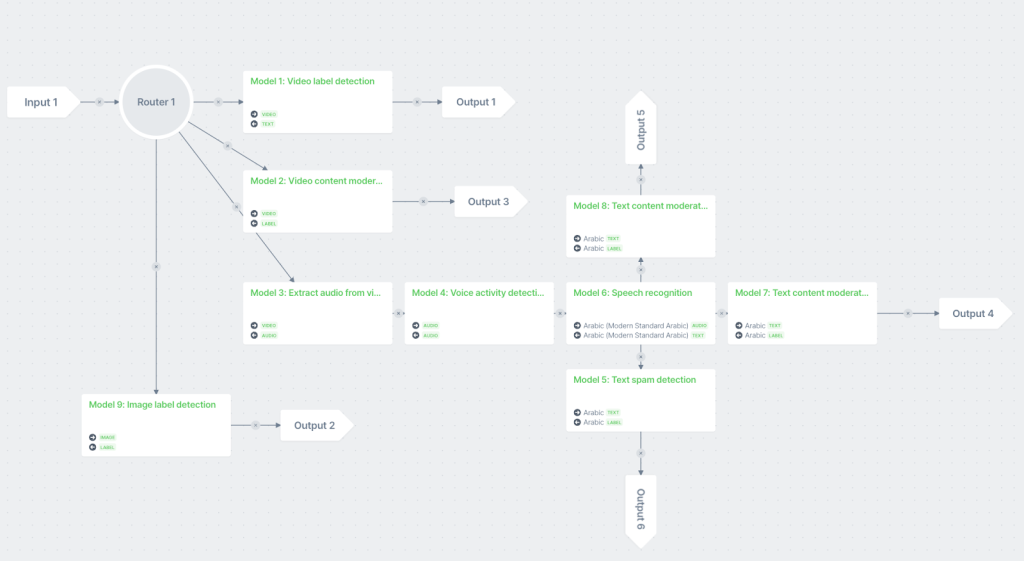

To address this challenge, the aiXplain team analyzed Aram’s existing content and identified common themes and patterns that could be used to train the machine learning models. They then developed a pipeline that used computer vision and natural language processing techniques to analyze image, video, and text content in real time.

The pipeline consisted of several steps: image and video analysis for detecting explicit content, object recognition for identifying specific objects, and text analysis for identifying hate speech and offensive language. The pipeline also included a script that compared the confidence score of each label or detection to a predetermined threshold and marked the content as spam if the score exceeded that threshold.
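The threshold check described above can be sketched roughly as follows. This is a minimal illustration rather than aiXplain's actual script: the function name `is_spam`, the `(label, score)` input shape, and the threshold value of 0.8 are all assumptions made for the example, since the article does not state them.

```python
from typing import Iterable, Tuple

# Hypothetical threshold; the article does not give the value actually used.
SPAM_THRESHOLD = 0.8


def is_spam(detections: Iterable[Tuple[str, float]],
            threshold: float = SPAM_THRESHOLD) -> bool:
    """Return True if any detection's confidence score exceeds the threshold."""
    return any(score > threshold for _label, score in detections)


if __name__ == "__main__":
    # One high-confidence detection is enough to flag the submission.
    print(is_spam([("nudity", 0.93), ("violence", 0.12)]))  # True
    # All scores below the threshold: not flagged.
    print(is_spam([("hate_speech", 0.40)]))  # False
    # No detections at all: not flagged.
    print(is_spam([]))  # False
```

A strict `>` comparison is used here to match the wording "exceeded the threshold"; a real deployment might well use a separate threshold per label.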

The results of each model were sent to a customized script that prepared the output. For each image, video, and text submission, the output consisted of a list of labels and their corresponding confidence scores. The labels corresponded to different categories of content, such as nudity, violence, and hate speech. For images and videos, an empty list indicated that no explicit content was detected in the submission. Any submission with a confidence score above the predetermined threshold was marked as spam.
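As a rough illustration of what such an output-preparation step might look like, the sketch below merges per-model results into one report per submission. The `ModerationReport` structure, the `prepare_output` function, the step names, and the 0.8 threshold are hypothetical; the article does not describe the real script's format in this detail.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ModerationReport:
    """Combined moderation result for one submission (hypothetical structure)."""
    submission_id: str
    # Maps each analysis step to its list of label/confidence entries.
    # An empty list means that step detected nothing.
    results: Dict[str, List[dict]] = field(default_factory=dict)
    spam: bool = False


def prepare_output(submission_id: str,
                   model_outputs: Dict[str, List[Tuple[str, float]]],
                   threshold: float = 0.8) -> ModerationReport:
    """Collect every model's (label, score) pairs and set the spam flag."""
    report = ModerationReport(submission_id=submission_id)
    for step, detections in model_outputs.items():
        entries = [{"label": label, "confidence": score}
                   for label, score in detections]
        report.results[step] = entries
        # Flag the submission if any score exceeds the assumed threshold.
        if any(entry["confidence"] > threshold for entry in entries):
            report.spam = True
    return report


if __name__ == "__main__":
    report = prepare_output(
        "submission-1",
        {"explicit_content": [], "text": [("hate_speech", 0.95)]},
    )
    print(report.results)
    print(report.spam)
```

In this example the explicit-content step returns an empty list, while the text step returns one high-confidence label, so the submission as a whole is flagged.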

Overall, the pipeline’s output provided a comprehensive analysis of each user submission, allowing for quick and accurate identification of inappropriate content.

Additional Information

After inappropriate content was filtered out, the remaining ideas went through a separate model for ranking, work that was done by Kareem, the Head of Human AI Interaction. The content moderation system developed by aiXplain has been highly effective in improving the user experience on Aram’s app. Since its implementation, the system has detected and removed thousands of pieces of inappropriate content while minimizing false positives and maintaining the app’s performance.

Conclusion

The partnership between Aram and aiXplain resulted in a highly effective AI-powered content moderation system that significantly improved the user experience on Aram’s platform. By using machine learning algorithms to automatically detect and remove inappropriate content, such as hate speech, nudity, and violence, the system has helped Aram maintain a safe and respectful environment for its users.

The system demonstrated a high level of accuracy, detecting and removing thousands of pieces of inappropriate content while minimizing false positives. Its ability to analyze image, video, and text content in real time allowed for quick moderation of user submissions.

The success of this project highlights the potential of AI-powered content moderation systems to enhance user experience and maintain a safe and respectful online environment. As AI technology continues to evolve, we can expect to see more companies leveraging its power to improve their platforms and better serve their users.
