How to Automate Unit Tests and Documentation with AI Agents

An aiXplain agent that writes unit tests for your Python code and a README for your GitHub repo.

Software testing can often feel like a repetitive, time-consuming task, especially when faced with the manual work of writing comprehensive unit tests for every piece of code. What if you could delegate this job to an agent that not only writes these tests for you but also generates the README documentation, ensuring your GitHub repository is clean, informative, and easy to navigate? At aiXplain, my team and I built an automated agent designed to streamline software testing and documentation for Python projects.

In this blog, we’ll dive into how we developed a multi-agent system that automates unit test generation for Python code and README generation. You’ll learn about the technical challenges we encountered, the tools and methods we used, and the impact of an automated testing assistant on developer workflows. If you’re interested in automation, developer tools, or simply easing the pains of testing and documentation, read on to discover how you, too, could bring this innovation into your projects.

Technical Details

- Programming language: Python

- LLM: GPT-4o

- Agent framework: aiXplain

- Agent code: Check this Google Colab

Unit Tests

The goal of writing unit tests for your software code is to ensure it has been tested against all cases before production. Writing these tests manually can be a time-intensive process. We built an agent that takes an input directory with relevant code files (Python code in this case) and generates unit tests to verify if your code passes all test cases. Below, we’ll walk you through how we built this agent.

Import libraries

First, set your API key and import the aiXplain factories used to build agents:

    import os

    # Set the API key before importing the aiXplain modules
    os.environ["TEAM_API_KEY"] = "TEAM_API_KEY_HERE"

    from aixplain.factories.agent_factory import AgentFactory
    from aixplain.factories.team_agent_factory import TeamAgentFactory

Next, create a script to read your code files from the input directory:

    import os

    def get_files_content(code_directory):
        # Collect the path of every .py file under code_directory
        python_files = []
        for root, _dirs, files in os.walk(code_directory):
            for file in files:
                if file.endswith(".py"):
                    python_files.append(os.path.join(root, file))

        # Read each file and pair its path with its content
        output = []
        for file in python_files:
            with open(file, "r", encoding="utf8") as f:
                content = f.read()
                output.append((file, content))
        return output

This function receives the code directory and returns a list of (file path, content) tuples, one per Python file.
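As a quick sanity check, the helper can be exercised on a throwaway directory. The sketch below is only an illustration: it restates the helper compactly so the block is self-contained, and the `demo` function and sample file names (`app.py`, `notes.txt`, `pkg/util.py`) are made up for the example.

```python
import os
import tempfile


def get_files_content(code_directory):
    """Return a list of (path, content) tuples for every .py file under code_directory."""
    output = []
    for root, _dirs, files in os.walk(code_directory):
        for name in files:
            if name.endswith(".py"):
                path = os.path.join(root, name)
                with open(path, "r", encoding="utf8") as handle:
                    output.append((path, handle.read()))
    return output


def demo():
    """Build a small temporary tree and show which files the helper picks up."""
    # Hypothetical sample files: two Python files and one file that should be ignored
    samples = {
        "app.py": "x = 1\n",
        "notes.txt": "not python\n",
        os.path.join("pkg", "util.py"): "y = 2\n",
    }
    with tempfile.TemporaryDirectory() as tmp:
        os.makedirs(os.path.join(tmp, "pkg"))
        for rel_path, text in samples.items():
            with open(os.path.join(tmp, rel_path), "w", encoding="utf8") as handle:
                handle.write(text)
        found = get_files_content(tmp)
        # Normalize to relative, forward-slash paths so the result is easy to compare
        return sorted(
            (os.path.relpath(path, tmp).replace(os.sep, "/"), content)
            for path, content in found
        )


if __name__ == "__main__":
    for path, content in demo():
        print(path, repr(content))
```

Only the two `.py` files come back; `notes.txt` is skipped because of the extension filter.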

Create the test and documentation agents

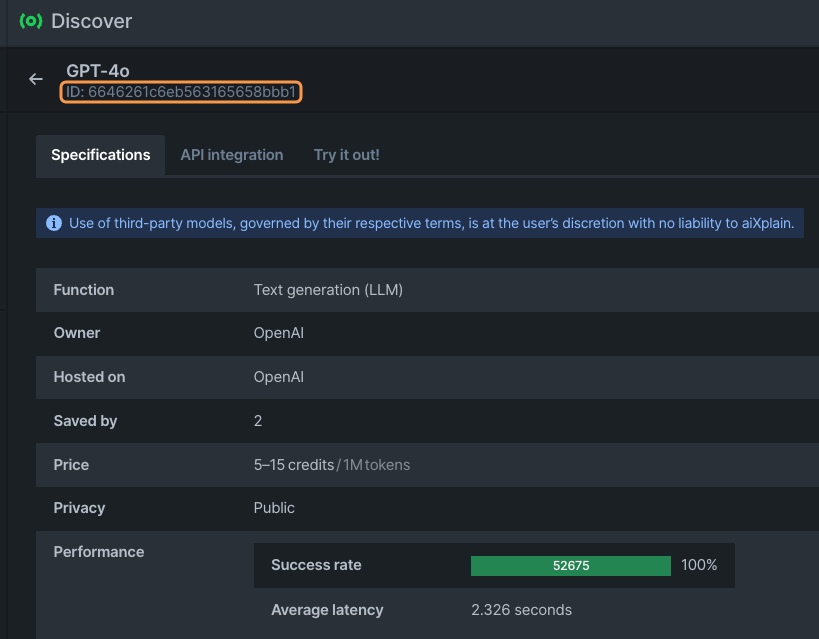

Building agents with aiXplain’s platform requires just a few lines of code. We selected GPT-4o for its strong text generation and performance capabilities. To build agents, select the LLM for your use case from the 80+ LLMs we have available.

Selecting the large language model for your agent

The first step is to get the LLM ID from Discover, the aiXplain marketplace, as shown below. Please note that you gain access to the marketplace after signing up.

Build the agent

The following lines of code are how we started to build our agents:

    # gpt 4o
    llm_id = "6646261c6eb563165658bbb1"

    # create test agent
    description = "You are an AI with advanced code understanding and planning capabilities. You are specialized in generating Python unit tests using pytest package. Search on the web for any information about the code that is missing. Also, you MUST mock any dependencies so it's not calling external dependencies."
    test_agent = AgentFactory.create(name="TestAgent", description=description, llm_id=llm_id)

    # create document agent
    description = "You are an AI with advanced documentation skills. You are specialized in generating markdown documentation given all the code repository. Search on the web for any package to get more information about it."
    doc_agent = AgentFactory.create(name="DocumentAgent", description=description, llm_id=llm_id)

AgentFactory.create, the call that creates each agent, is part of AgentFactory, the main entry point of aiXplain’s agentic platform. Since we are building a multi-agent system, we also need a team agent that brings the test agent and the document agent together. It is created much like the agents above:

    # team agent
    team_agent = TeamAgentFactory.create(name="DocumentTeamAgent", agents=[test_agent, doc_agent], llm_id=llm_id)

Please note that in the code snippet above, we passed the list of agents that form the team and used the same llm_id throughout.

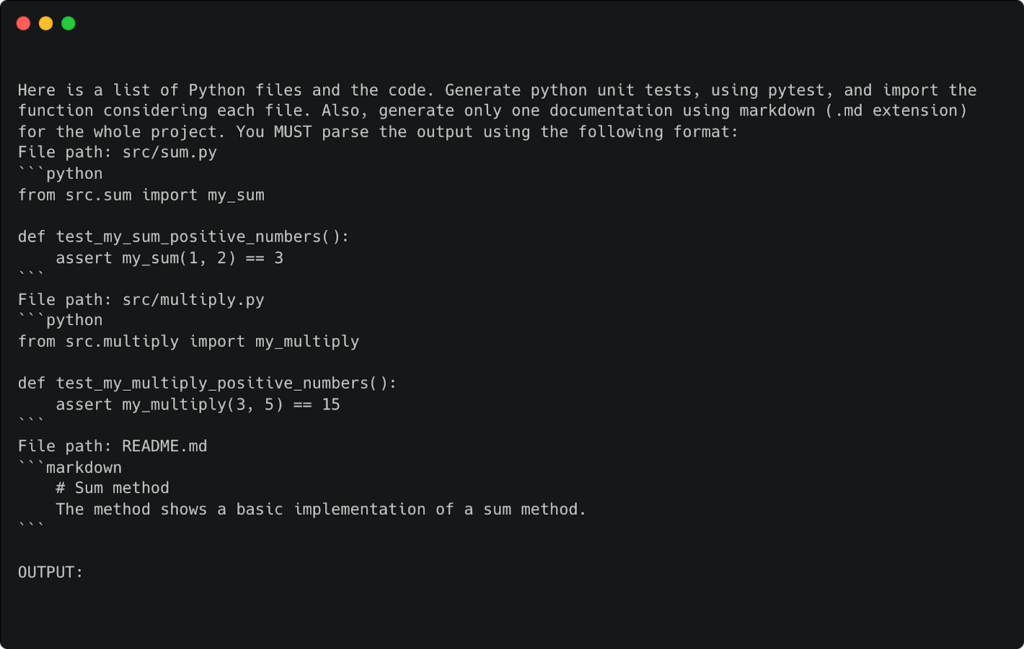

Prompt engineering

Next, write the prompt to define your agent’s behavior. We created a separate prompt.txt file and passed it as follows:

    # get file content
    file_content = get_files_content("src/")
    if not file_content:
        # Stop early: there is nothing to send to the agents
        raise SystemExit("Folder must contain .py files")

    # load the instructions, then append every file's path and content
    with open("prompt.txt", "r") as f:
        prompt = f.read().strip()

    for file_path, content in file_content:
        prompt += f"\nFile path: {file_path}\nContent: {content}"
    prompt += "\nOUTPUT:\n"

We structured the prompt so that the agent understands what the input and output should look like.
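The contents of prompt.txt are not reproduced in this post, so the following is only a sketch of the assembly step, wrapped in a function so it can be checked without calling any model. The `build_prompt` name and the sample instruction text are hypothetical; the `File path:` / `Content:` / `OUTPUT:` layout mirrors the snippet above.

```python
def build_prompt(instructions, files):
    """Assemble the final prompt from instructions and (path, content) pairs.

    `instructions` stands in for the text of prompt.txt (hypothetical here);
    `files` is a list of (file_path, content) tuples such as the one returned
    by get_files_content.
    """
    parts = [instructions.strip()]
    for file_path, content in files:
        # One labeled section per source file
        parts.append(f"File path: {file_path}\nContent: {content}")
    # Trailing marker that tells the model where its answer should start
    parts.append("OUTPUT:\n")
    return "\n".join(parts)


if __name__ == "__main__":
    sample_files = [("src/a.py", "def f():\n    return 1\n")]
    print(build_prompt("Write pytest unit tests for each file.", sample_files))
```

Keeping the assembly in a small pure function makes it easy to inspect exactly what the team agent receives before spending any model calls.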

Then, in one line, you can run the team agent:

    # run team agent
    response = team_agent.run(prompt)

Parsing the output

Finally, parse the output correctly and save it in the desired folder:

    # parse the response: create the output folder if needed, then save the agents' answer
    os.makedirs("tests", exist_ok=True)
    with open("tests/test.md", "w", encoding="utf8") as f:
        f.write(response["data"]["output"])

Output

Once the team of agents ran, our agent produced the following outputs:

Unit test example

Code snippet illustrating unit tests generated by the agent.

    # 1. File path: src/utils/config.py
    import pytest
    import os
    from unittest.mock import patch

    # Mocking environment variables
    @patch.dict(os.environ, {
        "BACKEND_URL": "https://mock-backend-url.com",
        "MODELS_RUN_URL": "https://mock-models-run-url.com",
        "TEAM_API_KEY": "mock_team_api_key",
        "AIXPLAIN_API_KEY": "mock_aixplain_api_key",
        "PIPELINE_API_KEY": "mock_pipeline_api_key",
        "MODEL_API_KEY": "mock_model_api_key",
        "LOG_LEVEL": "DEBUG",
        "HF_TOKEN": "mock_hf_token"
    })
    def test_config_values():
        import src.utils.config as config
        assert config.BACKEND_URL == "https://mock-backend-url.com"
        assert config.MODELS_RUN_URL == "https://mock-models-run-url.com"
        assert config.TEAM_API_KEY == "mock_team_api_key"
        assert config.AIXPLAIN_API_KEY == "mock_aixplain_api_key"
        assert config.PIPELINE_API_KEY == "mock_pipeline_api_key"
        assert config.MODEL_API_KEY == "mock_model_api_key"
        assert config.LOG_LEVEL == "DEBUG"
        assert config.HF_TOKEN == "mock_hf_token"

    import pytest

    from unittest.mock import patch, Mock
    from requests.models import Response
    from src.utils.request_utils import _request_with_retry

    @patch('src.utils.request_utils.requests.Session')
    def test_request_with_retry(mock_session):
        # Create a mock response object
        mock_response = Mock(spec=Response)
        mock_response.status_code = 200

        # Configure the mock session to return the mock response
        mock_session_instance = mock_session.return_value
        mock_session_instance.request.return_value = mock_response

        # Call the function
        response = _request_with_retry('GET', 'https://example.com')

        # Assert that the request was made with the correct parameters
        mock_session_instance.request.assert_called_once_with(method='GET', url='https://example.com')

        # Assert that the response is as expected
        assert response == mock_response

    import pytest

    from unittest.mock import patch, mock_open, MagicMock
    from src.utils.file_utils import save_file, download_data, upload_data, s3_to_csv

    @patch('src.utils.file_utils._request_with_retry')
    @patch('builtins.open', new_callable=mock_open)
    @patch('os.getcwd', return_value='/mocked/path')
    @patch('os.path.basename', return_value='mocked_file.csv')
    @patch('src.utils.file_utils.config')
    def test_save_file(mock_config, mock_basename, mock_getcwd, mock_open, mock_request):
        mock_response = MagicMock()
        mock_response.content = b'test content'
        mock_request.return_value = mock_response
        download_url = 'http://example.com/file.csv'
        download_file_path = save_file(download_url)
        mock_request.assert_called_once_with('get', download_url)
        mock_open.assert_called_once_with('/mocked/path/aiXplain/mocked_file.csv', 'wb')
        assert download_file_path == '/mocked/path/aiXplain/mocked_file.csv'

    @patch('requests.get')
    @patch('builtins.open', new_callable=mock_open)
    def test_download_data(mock_open, mock_get):
        mock_response = MagicMock()
        mock_response.iter_content = lambda chunk_size: [b'test content']
        mock_response.raise_for_status = lambda: None
        mock_get.return_value = mock_response
        url_link = 'http://example.com/file.csv'
        local_filename = download_data(url_link)
        mock_get.assert_called_once_with(url_link, stream=True)
        mock_open.assert_called_once_with('file.csv', 'wb')
        assert local_filename == 'file.csv'

    @patch('src.utils.file_utils._request_with_retry')
    @patch('builtins.open', new_callable=mock_open, read_data=b'test content')
    @patch('os.path.basename', return_value='mocked_file.csv')
    @patch('src.utils.file_utils.config')
    def test_upload_data(mock_config, mock_basename, mock_open, mock_request):
        mock_response = MagicMock()
        mock_response.json.return_value = {
            'key': 'mocked_key',
            'uploadUrl': 'http://example.com/upload'
        }
        mock_request.return_value = mock_response
        mock_config.BACKEND_URL = 'http://backend.example.com'
        mock_config.AIXPLAIN_API_KEY = 'mocked_api_key'
        file_name = 'mocked_file.csv'
        s3_link = upload_data(file_name)
        mock_request.assert_any_call('post', 'http://backend.example.com/sdk/file/upload/temp-url', headers={'x-aixplain-key': 'mocked_api_key'}, data={'contentType': 'text/csv', 'originalName': 'mocked_file.csv'})
        mock_request.assert_any_call('put', 'http://example.com/upload', headers={'Content-Type': 'text/csv'}, data=b'test content')
        assert s3_link == 's3://example.com/mocked_key'

    @patch('boto3.client')
    @patch('os.getenv', side_effect=lambda key: 'mocked_value')
    def test_s3_to_csv(mock_getenv, mock_boto3_client):
        mock_s3 = MagicMock()
        mock_boto3_client.return_value = mock_s3
        mock_s3.list_objects_v2.return_value = {'Contents': [{'Key': 'mocked_file.csv'}]}
        s3_url = 's3://mocked-bucket/mocked_file.csv'
        aws_credentials = {'AWS_ACCESS_KEY_ID': 'mocked_id', 'AWS_SECRET_ACCESS_KEY': 'mocked_secret'}
        csv_path = s3_to_csv(s3_url, aws_credentials)
        mock_boto3_client.assert_called_once_with('s3', aws_access_key_id='mocked_id', aws_secret_access_key='mocked_secret')
        mock_s3.list_objects_v2.assert_called_once_with(Bucket='mocked-bucket', Prefix='mocked_file.csv')
        assert csv_path == 'mocked_file.csv'

Output from the document agent

# Project Documentation

## Overview

This project is designed to handle various utility functions related to configuration, HTTP requests, and file operations, particularly focusing on interactions with web services and AWS S3. Below is a summary of each file's purpose and functionality within the project.

### File: `src/utils/config.py`

This file is responsible for managing configuration settings for the project. It utilizes the `os` module to fetch environment variables, which include URLs and API keys necessary for the application's operation. This setup allows for flexible configuration management, adapting to different environments by simply changing environment variables.

### File: `src/utils/request_utils.py`

This file provides a utility function named `_request_with_retry`. This function is a wrapper around the `requests` library, designed to perform HTTP requests with built-in retry logic. It uses a session configured with retry settings to handle transient errors, ensuring more robust and reliable HTTP communication.

### File: `src/utils/file_utils.py`

This file contains several utility functions aimed at facilitating file operations. Key functionalities include:

- Downloading files from a specified URL.

- Uploading data to AWS S3 using pre-signed URLs, which allows secure and temporary access to S3 resources.

- Converting S3 URLs to CSV files, enabling easy data manipulation and analysis.

These utilities handle various aspects of file management, including file paths, HTTP requests, and interactions with AWS S3, making it easier to integrate file operations into the project workflow.

Conclusion

This project demonstrated how aiXplain’s platform can be leveraged to automate repetitive tasks like unit tests and README generation, which are crucial but often time-consuming. Throughout the process, we faced some technical challenges, especially around prompt engineering, fine-tuning the agents’ responses, and parsing the output to ensure accuracy. Moving forward, we aim to improve the agents to handle larger directories seamlessly and generate separate test and documentation files, making them even more adaptable.

Our next step is to build a release agent to assist with aiXplain’s SDK versioning process. This agent will review code changes, analyze functional test results, and suggest improvements to the team directly through Slack, streamlining the release workflow. With these types of agents, we hope to continue exploring how automation can make developers’ lives easier and allow us to focus more on building quality software.

Ready to automate your workflow?

Build your first AI agent today with aiXplain SDK

For support and questions, join our Discord community.
