aiXplain On-Edge: Hybrid Deployment for Enterprise AI Without Vendor Lock-in

As enterprises race to adopt generative AI and large language models (LLMs), they face a core challenge: how to innovate with cutting-edge AI while ensuring data privacy, compliance, and control. For organizations in finance, government, and other regulated industries, this challenge is not theoretical. It defines their deployment strategy and is driving a shift toward hybrid AI deployment as a practical, secure solution.

aiXplain On-Edge offers a modern answer: a hybrid AI deployment model that lets organizations keep all data and traffic on-premises or in a private cloud, while still accessing aiXplain’s cloud-based AI marketplace during development and experimentation. This approach accelerates iteration, reduces vendor lock-in, and lets enterprises prototype with the latest AI models before committing to full on-premises deployment.

Why Hybrid AI Deployment Matters

The AI landscape is evolving rapidly, with new LLMs, agent frameworks, and multimodal tools emerging almost monthly. As adoption accelerates (42% of large enterprises have already implemented AI), security and compliance remain top concerns for most decision-makers (ibm.com). At the same time, performance and cost are becoming equally critical. Many organizations are realizing that cloud alone may not meet the demands of real-time workloads, budget predictability, or infrastructure control.

Enterprises want to explore and adopt cutting-edge AI technologies, but they must do so while navigating complex regulations like GDPR, HIPAA, and national data residency laws. A hybrid deployment approach like aiXplain On-Edge strikes the right balance: it enables innovation while meeting operational and regulatory requirements.

Key Benefits of Hybrid AI Deployment with aiXplain On-Edge

- Security and compliance: Sensitive data never leaves your environment, ensuring adherence to internal and external standards.

- Access to innovation: Teams can experiment freely with over 38,000 AI assets and 180+ LLMs from aiXplain’s cloud marketplace.

- Cost control: Run production workloads on-premises for greater predictability, using cloud resources only when needed.

- Performance: Real-time inference is faster and more reliable when executed locally.

- Vendor independence: Avoid lock-in by switching models or providers without rearchitecting your stack.

Enterprises are doubling down on hybrid strategies to gain flexibility, control, and cost-efficiency. Rising infrastructure demands, unmet cloud capacity needs, and high operational costs are prompting a shift, particularly for legacy and sensitive workloads. Rather than abandoning the cloud, organizations are aligning workloads with their unique requirements, making hybrid the new default for modern AI deployment, according to an Uptime Institute report.

How aiXplain On-Edge Works

To understand how these benefits are achieved, let’s look at how aiXplain On-Edge works in practice.

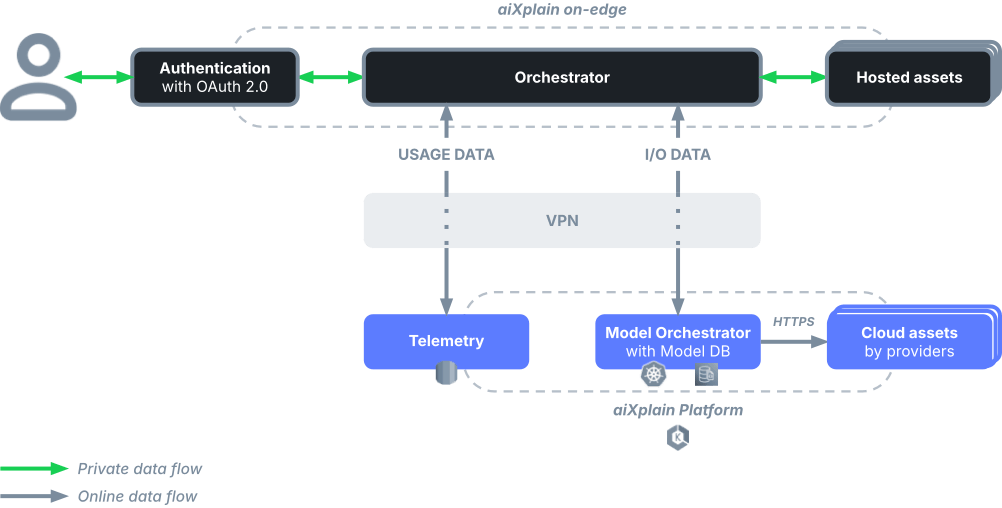

aiXplain On-Edge enables enterprises to host aiXplain’s full agentic AI stack (selected models, orchestration and runtime infrastructure for AI agents) on-premises or in a private cloud, with no data ever leaving the environment. Meanwhile, users can still access aiXplain’s cloud services during early development to evaluate new models and prototype agents.

Once a model or agent proves its value in the cloud, it can be ported on-premises with minimal effort. This “develop in the cloud, deploy on-premises” workflow reduces risk and boosts agility. Enterprises retain full control of their infrastructure while benefiting from aiXplain’s integrated marketplace and tooling.
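To make the “develop in the cloud, deploy on-premises” pattern concrete, here is a minimal Python sketch of a request-routing policy. It is an illustration under stated assumptions, not aiXplain’s API: the `Stage`, `Endpoint`, and `select_endpoint` names and both URLs are hypothetical, and the sketch assumes cloud experimentation uses only non-sensitive or synthetic data.

```python
from dataclasses import dataclass
from enum import Enum


class Stage(Enum):
    DEVELOPMENT = "development"
    PRODUCTION = "production"


@dataclass(frozen=True)
class Endpoint:
    name: str
    url: str
    on_premises: bool


# Hypothetical endpoints for illustration only; these are not real aiXplain URLs.
CLOUD_MARKETPLACE = Endpoint("cloud-marketplace", "https://cloud.example.com/v1", on_premises=False)
ON_PREM_RUNTIME = Endpoint("on-prem-runtime", "https://ai.internal.example/v1", on_premises=True)


def select_endpoint(stage: Stage, contains_sensitive_data: bool) -> Endpoint:
    """Pick where a request runs under a develop-in-cloud, deploy-on-premises policy."""
    if stage is Stage.PRODUCTION or contains_sensitive_data:
        # Production traffic and any sensitive payload stay inside the environment.
        return ON_PREM_RUNTIME
    # Assumption: early experiments on non-sensitive data may use the cloud marketplace.
    return CLOUD_MARKETPLACE
```

The design choice here is that the on-premises runtime is the default for anything sensitive or production-bound; the cloud path is only reachable when both conditions are explicitly false.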

Accelerating Innovation

Hybrid architectures also shorten development cycles. By decoupling development (in the cloud) from production deployment (on-premises), teams can iterate on models rapidly. Our customer data shows that using the AI marketplace and cloud resources can cut the time to onboard and test a new LLM from days to hours. After validation, the proven model is onboarded to the on-premises platform for production inference. For lean teams in regulated sectors like finance or government, this approach provides a fast, low-risk path to operationalizing AI without compromising compliance.
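The validate-then-onboard step can be expressed as a simple promotion gate. The sketch below is hypothetical: the `Evaluation` record, its fields, and the `min_quality` threshold are assumptions for illustration, and real scores would come from whatever benchmarks and compliance reviews a team actually runs in the cloud.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Evaluation:
    """Result of testing one candidate LLM in the cloud (hypothetical schema)."""
    model_id: str
    quality_score: float     # e.g. accuracy on a held-out task benchmark, 0..1
    passed_compliance: bool  # outcome of an internal compliance review


def choose_model_for_onboarding(
    evaluations: Sequence[Evaluation], min_quality: float
) -> Optional[str]:
    """Return the highest-scoring compliant candidate to onboard on-premises, or None."""
    eligible = [
        e for e in evaluations
        if e.passed_compliance and e.quality_score >= min_quality
    ]
    if not eligible:
        # Nothing is promoted; the team keeps iterating in the cloud.
        return None
    best = max(eligible, key=lambda e: e.quality_score)
    return best.model_id
```

For example, a team might call `choose_model_for_onboarding(results, min_quality=0.8)` after a round of cloud experiments; a `None` result means no candidate is ready for on-premises deployment yet.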

One example: Incorta used aiXplain to prototype multiple LLMs in the cloud, validate performance and compliance, and deploy the selected model on-premises, all within a week, with zero data egress.

Avoiding Vendor Lock-In

Vendor lock-in (relying on a single cloud or AI provider) can limit flexibility and drive up long-term costs. Enterprises need a way to explore and adopt AI technologies while staying in control of their choices and infrastructure.

Governments and large banks are wary of becoming dependent on any single AI vendor. Recent federal policy explicitly calls for knowledge transfer, model portability, and pricing transparency to avoid lock-in.

aiXplain’s hybrid AI architecture supports multi-cloud and on-premises strategies, enabling organizations to switch providers or adopt open-source models without major rework. For instance, a bank could develop an AI solution using a variety of cloud APIs and LLMs, then containerize the winning approach and run it on local servers or in an in-house Kubernetes cluster. Because aiXplain On-Edge is Kubernetes-compatible, the bank can redeploy the same AI solution across cloud platforms (e.g., AWS, Azure, Google Cloud) and other on-premises environments with ease. This supports portability, scalability, and consistency, which are essential for modern IT teams.
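Switching providers without major rework usually depends on keeping application code behind a narrow model interface. The Python sketch below illustrates that idea with `typing.Protocol`; the `TextModel` interface, both backend classes, and the `BACKENDS` registry are hypothetical stand-ins (they make no network calls) and do not represent aiXplain’s SDK. Packaged in a container image, the same code could run unchanged on a cloud-hosted or on-premises Kubernetes cluster, with only the backend selection changing.

```python
from typing import Callable, Dict, Protocol


class TextModel(Protocol):
    def generate(self, prompt: str) -> str: ...


class CloudApiModel:
    """Stand-in for a hosted LLM API client (hypothetical; no real network call)."""

    def __init__(self, provider: str) -> None:
        self.provider = provider

    def generate(self, prompt: str) -> str:
        return f"[{self.provider}] {prompt}"


class LocalOpenSourceModel:
    """Stand-in for an open-source model served inside the cluster (hypothetical)."""

    def __init__(self, model_name: str) -> None:
        self.model_name = model_name

    def generate(self, prompt: str) -> str:
        return f"[local:{self.model_name}] {prompt}"


# Registry keyed by a config value: switching providers is a config change, not a rewrite.
BACKENDS: Dict[str, Callable[[], TextModel]] = {
    "cloud": lambda: CloudApiModel("hosted-provider"),
    "on_prem": lambda: LocalOpenSourceModel("open-weights-llm"),
}


def summarize(model: TextModel, document: str) -> str:
    # Application logic depends only on the TextModel interface, not on a vendor.
    return model.generate(f"Summarize: {document}")
```

Moving from a hosted API to an in-cluster open-source model then amounts to calling `summarize(BACKENDS["on_prem"](), text)` instead of `summarize(BACKENDS["cloud"](), text)`.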

According to a hybrid cloud framework shared by Pluralsight, regulated workloads (those with high data sensitivity) should run on-premises or in a hybrid configuration, while only low-sensitivity workloads are safe in public cloud environments.
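That rule of thumb can be encoded as a placement check, for instance in a deployment pipeline. This is a simplified, hypothetical sketch: it collapses sensitivity into two levels and uses made-up `Sensitivity`, `Placement`, and `is_allowed` names; it is not the Pluralsight framework itself.

```python
from enum import Enum
from typing import FrozenSet


class Sensitivity(Enum):
    LOW = 1
    HIGH = 2  # e.g. regulated workloads


class Placement(Enum):
    PUBLIC_CLOUD = "public_cloud"
    HYBRID = "hybrid"
    ON_PREMISES = "on_premises"


def allowed_placements(sensitivity: Sensitivity) -> FrozenSet[Placement]:
    """Map workload sensitivity to permitted deployment targets (simplified rule)."""
    if sensitivity is Sensitivity.HIGH:
        # High-sensitivity workloads stay on-premises or in a hybrid configuration.
        return frozenset({Placement.ON_PREMISES, Placement.HYBRID})
    # Low-sensitivity workloads may also use the public cloud.
    return frozenset(Placement)


def is_allowed(sensitivity: Sensitivity, placement: Placement) -> bool:
    """Return True if the placement is permitted for this sensitivity level."""
    return placement in allowed_placements(sensitivity)
```

A pipeline could call `is_allowed` before rollout and reject any manifest that targets the public cloud for a high-sensitivity workload.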

Who It’s For

- Government agencies that handle confidential data and require total control over infrastructure.

- Financial institutions that must adhere to regulatory compliance while remaining competitive with AI-powered innovation.

- Large enterprises with hybrid cloud strategies and stringent security policies.

Wrapping Up: The Future of Hybrid AI Deployment

aiXplain On-Edge enables a future-ready approach to enterprise AI that is secure, modular, and built for both speed and control. It brings together on-premises trust with cloud flexibility, empowering teams to build, test, and scale without compromise.

In an era where trust, transparency, and agility matter more than ever, aiXplain On-Edge gives enterprises a practical and powerful way to lead.