Do You Really Understand AI Agents?

Understanding AI Agents: A Developer’s Perspective

When I first stepped onto the AI agents hype train, I was completely clueless about why everyone was talking so much about these agents. Coming from an AI and computer science background, I understood technical concepts ranging from object-oriented programming to large language models (LLMs). The first time I read about what an AI agent does, it seemed like a simple Python script where I’d create classes with methods and have one class (the agent) call a method from another class (the tool). Then came function calling, and my brain felt even more scrambled. I wondered what was so different about function calling because, as the name suggests, it literally means calling a function (or method) from another.

To better understand agents, I found it helpful to expand on the analogy of classes and functions. Think of an AI agent as a class and its methods as the functions it can call. The agent works through a flow of reason → act → observe → respond:

- Reason: The agent first reasons about the problem, breaking it into smaller, manageable tasks.

- Act: It then acts on these tasks by calling the appropriate functions or tools to solve them.

- Observe: Next, the agent consumes the outputs of these functions as observations.

- Respond: Finally, it combines the observations to provide a coherent and informed response.

This structured reasoning cycle was a revelation to me—it showed how agents aren’t just passively calling functions but actively reasoning and deciding the best course of action for each task. Unlike traditional LLM usage, where a single response or function call might suffice, agents orchestrate multiple steps to achieve more complex and dynamic goals.
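To make the cycle concrete, here is a minimal sketch of the reason → act → observe → respond flow in Python. Everything in it is an assumption made for illustration: the tool names `get_weather` and `get_time`, the stubbed return values, and the keyword-based `reason` function that stands in for the LLM. A real agent would let the model do the reasoning and would usually loop back to reason again after each observation; this version plans once to keep the shape visible.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools: plain functions the agent is allowed to call.
def get_weather(city: str) -> str:
    # Stubbed data; a real tool would query a weather service.
    return f"Sunny, 15°C in {city}"

def get_time(city: str) -> str:
    # Stubbed data as well.
    return f"09:00 in {city}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "get_weather": get_weather,
    "get_time": get_time,
}

def reason(question: str) -> List[Tuple[str, str]]:
    """Stand-in for the LLM: break the question into (tool, argument) steps."""
    city = "Tokyo"  # assumption: a real agent would extract this from the question
    plan = []
    if "weather" in question.lower():
        plan.append(("get_weather", city))
    if "time" in question.lower():
        plan.append(("get_time", city))
    return plan

def run_agent(question: str) -> str:
    plan = reason(question)                   # Reason: decide which tools are needed
    observations = []
    for tool_name, argument in plan:
        result = TOOLS[tool_name](argument)   # Act: call the chosen tool
        observations.append(result)           # Observe: keep the tool's output
    if not observations:
        return "I don't have a tool for that."
    return " | ".join(observations)           # Respond: combine the observations

print(run_agent("What's the weather and time in Tokyo?"))
```

The part worth noticing is that `run_agent` never hardcodes which tool to call. That decision lives in `reason`, which is exactly the piece an LLM replaces.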

This got me thinking about how agents compare to traditional task schedulers like cron. A cron job runs a command on a schedule the user defines, such as running a script at a specific time every day. It has no way to decide how to handle a complex task or adapt to changing inputs. For instance, a cron job might send an email reminder daily, but it wouldn’t know how to tailor the email content to the recipient’s changing preferences. Agents fill this gap: something still has to trigger them (it can be as simple as a cron schedule), but once running they add reasoning, action, and adaptability to carry out more sophisticated workflows.

Naturally, this raised a question in my mind: How does this reasoning manifest in practice, particularly with tools like function calling?

Function calling

Then I went down the rabbit hole of understanding function calling through online blogs and discussions with engineers. One key realization was that an LLM doesn’t automatically know which function to call; it has to be told which functions exist. The prompt carries a description of each available function (its name, its purpose, and its parameters), and the LLM uses those descriptions to identify the task at hand and pick the function that best suits the need. For example, if the input prompt asks for weather information, the LLM interprets the intent and matches it to a weather API function, provided this function is pre-defined and accessible.
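As a rough illustration of what prompting the model with a function can look like, the sketch below describes one function in a JSON-Schema-like shape and folds it into a prompt. The function name `get_weather`, the schema layout, and the prompt wording are all assumptions for this example; real LLM APIs each have their own format for declaring functions, so treat this as the general idea rather than any vendor's interface.

```python
import json

# Hypothetical description of one function the model may ask for.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Get the current weather for a city.",
    "parameters": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}

def build_prompt(user_message: str, tools: list) -> str:
    """Combine the function descriptions and the user message into one prompt."""
    tool_text = json.dumps(tools, indent=2)
    return (
        "You can call the following functions:\n"
        f"{tool_text}\n\n"
        "If a function fits the request, reply with JSON of the form "
        '{"name": <function name>, "arguments": {...}}.\n\n'
        f"User: {user_message}"
    )

prompt = build_prompt("What's the weather in Tokyo?", [WEATHER_TOOL])
print(prompt)
```

Without the description block, the model has nothing to match the user's intent against, which is why a missing or vague function description tends to produce wrong or absent function calls.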

Once I grasped this, I discovered that function calling makes the LLM produce structured responses, such as a JSON object naming the function and its arguments, which an application can parse and pass on to external tools and APIs. Did you understand that? Yes? No? Maybe? Well, I am going to assume you need an example for a better understanding because I know I needed one.

Let’s consider the command-line utility curl. When you use curl, you invoke a specific functionality to perform tasks like sending HTTP requests to a server. For instance:

curl -X GET "https://api.example.com/weather?city=Tokyo"

This command sends an HTTP GET request to retrieve data from the specified URL, which you can think of as “calling” a function on the server. curl accepts structured input (like a URL, headers, or query parameters), and the server often returns structured output, such as JSON:

{
  "city": "Tokyo",
  "temperature": "15°C",
  "condition": "Sunny"
}

While this is a straightforward example of calling a function, LLMs take this a step further. When an LLM receives a natural language query, such as “What’s the weather in Tokyo?”, it can dynamically interpret the intent, map it to a pre-defined function (like a weather API), and generate the equivalent request. Behind the scenes, this mapping might result in a curl command or a similar HTTP request executed by an intermediary software layer.
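Here is a small sketch of what that intermediary layer might do, under some loud assumptions: the model's reply is a JSON object with `name` and `arguments` keys, the only registered function is a hypothetical `get_weather`, and the URL is the placeholder from the curl example. The code builds the HTTP request but deliberately does not send it, since `api.example.com` is not a real weather service.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://api.example.com/weather"  # placeholder URL from the curl example

def get_weather(city: str) -> Request:
    """Build (but do not send) the GET request for the weather endpoint."""
    query = urlencode({"city": city})
    return Request(f"{BASE_URL}?{query}", method="GET")

# Hypothetical registry of functions the model is allowed to request.
AVAILABLE_FUNCTIONS = {"get_weather": get_weather}

def dispatch(model_output: str) -> Request:
    """Parse the model's JSON function call and route it to the matching function."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in AVAILABLE_FUNCTIONS:
        raise ValueError(f"Unknown function: {name}")
    return AVAILABLE_FUNCTIONS[name](**call["arguments"])

# Assumed model output for the query "What's the weather in Tokyo?"
model_output = '{"name": "get_weather", "arguments": {"city": "Tokyo"}}'
request = dispatch(model_output)
print(request.get_method(), request.full_url)
```

The model only ever produces the JSON string. Checking the function name against a registry before calling anything is the application's job, and it keeps the model from triggering code you never meant to expose.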

In essence, LLMs don’t replace tools like curl; they enhance their usability by acting as an intelligent intermediary, translating vague user queries into precise, executable instructions. This capability makes LLMs invaluable for creating dynamic, user-friendly systems capable of adapting to complex workflows.

Understanding function calling brought me closer to grasping the mechanics of AI agents, but it left me wondering: Where did the idea of ‘agents’ come from? Are they just tools for executing tasks, or is there more to their story? To answer this, I took a deep dive into their origins and evolution.

Origins of AI Agents: From Philosophy to AI

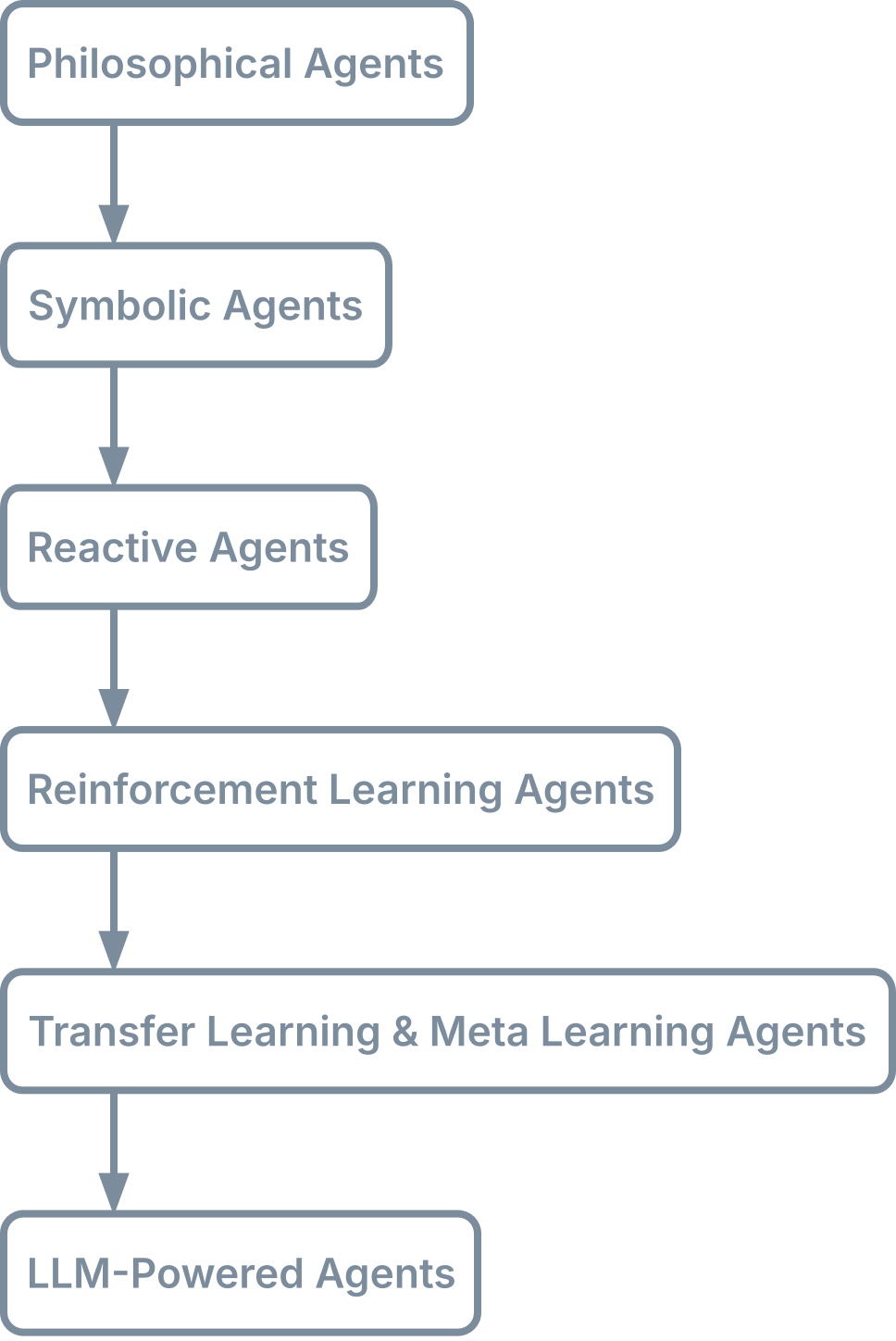

The concept of agents isn’t new—it dates back to philosophy, where agents were seen as entities capable of acting on behalf of others with autonomy. Fast forward to the world of artificial intelligence, and these philosophical roots have evolved into computational models. According to the survey paper, the early AI agents were rule-based systems designed to perform specific tasks. These were a far cry from today’s adaptive, LLM-powered agents. The evolution looked like as shown in the following diagram:

Understanding this evolution helped me see how modern agents embody the progress made over decades of AI development. In the next blog, I’ll demonstrate this by building the BaristaAgent—a practical example of how far AI agents have come.

LLMs: The catalyst for modern AI agents

Large Language Models (LLMs) became a turning point for AI agents. Unlike earlier agents, which relied on hardcoded rules or domain-specific training, LLMs enabled agents to interpret and respond in natural language, making them more versatile and interactive. Capabilities like function calling allow these agents to interact with APIs, databases, and other systems, creating a bridge between understanding and action.

Conclusion

The leap from early rule-based systems to today’s LLM-powered agents isn’t just about technological evolution—it’s about making advanced AI accessible. What once required painstaking expertise and custom coding can now be achieved with modern frameworks that simplify every step of the process.

If you’re ready to explore the possibilities, aiXplain’s Agentic Framework offers a seamless way to design, build, and deploy AI agents. For those who want to dive deeper, aiXplain SDK equips you with all the tools you need to get started.

In part 2, we’ll shift from theory to practice, exploring how easy it is to build your own agent and bring abstract ideas to life. Curious about what that looks like? You’ll find out soon!
