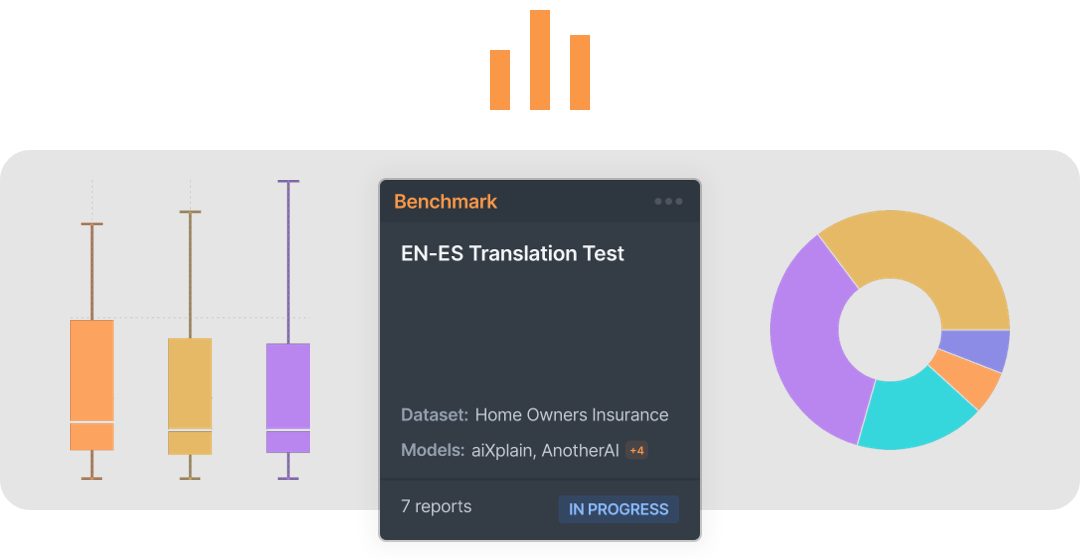

Benchmark provides an easy and fast way to evaluate the performance of AI systems based on user-defined performance metrics. Start a job within seconds and create an accurate, comprehensive, and interactive report that allows you to diagnose and compare AI systems continuously and efficiently.

How Benchmark works in 4 simple steps

- Configure: Populate your evaluation dataset, models, metrics, and other configurations to prepare your job.

- Run: Start the Benchmark job with a click of a button.

- Analyze: Diagnose the performance of the models through the Benchmark report generated.

- Iterate: Rerun Benchmark jobs to monitor model changes or create a new job to evaluate models with different metrics and datasets.
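Benchmark itself is no-code, but the configure, run, analyze, and iterate loop may be easier to picture in code. The following is a minimal Python sketch of that loop, not the Benchmark API: `run_benchmark`, `exact_match`, the toy dataset, and the two toy models are all hypothetical names invented for this illustration.

```python
from statistics import mean

# Hypothetical stand-ins for illustration only; none of these names
# come from the Benchmark product.

def exact_match(prediction: str, reference: str) -> float:
    """User-defined metric: 1.0 if the texts match, ignoring case and outer whitespace."""
    return 1.0 if prediction.strip().lower() == reference.strip().lower() else 0.0

def run_benchmark(dataset, models, metrics):
    """Score every model on every metric and return a nested report dict."""
    report = {}
    for model_name, model in models.items():
        predictions = [model(row["input"]) for row in dataset]
        report[model_name] = {
            metric_name: mean(
                metric(pred, row["reference"])
                for pred, row in zip(predictions, dataset)
            )
            for metric_name, metric in metrics.items()
        }
    return report

# Configure: dataset, models, and metrics.
dataset = [
    {"input": "2+2", "reference": "4"},
    {"input": "capital of France", "reference": "Paris"},
]
models = {
    "echo": lambda text: text,  # toy model that repeats its input
    "lookup": lambda text: {"2+2": "4", "capital of France": "paris"}.get(text, ""),
}
metrics = {"exact_match": exact_match}

# Run, then analyze: compare per-model scores in the report.
report = run_benchmark(dataset, models, metrics)
for model_name, scores in report.items():
    print(model_name, scores)
```

Iterating then amounts to calling `run_benchmark` again with a changed model, metric, or dataset and comparing the new report with the previous one.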

Supported functions in Benchmark

Benefits of Benchmark

Create a Benchmark job in a single step with our no-code, user-friendly interface.

You don’t have to wait until the Benchmark job is complete to view the report. You can check the report at any time.

Obtain granular, easy-to-interpret insights into model quality, latency, footprint, cost, and bias with interactive Benchmark reports.

Benchmark is a cost-optimized evaluation tool with no required subscription. Pay for each generated report individually, with no commitment.

Reports are equipped with advanced filtering options to provide better insights for your models.

Generate derivative datasets based on your specified filters to analyze the data further.
