Meet Evolver: aiXplain’s Meta-Agent That Makes AI Self-Improving

What if your AI agents could continuously refine their performance and reasoning—automatically—without you writing a single line of code?

We’re excited to introduce Evolver, a breakthrough meta-agent that brings evolutionary optimization to AI systems. While researchers focus on training foundation models with billions of parameters, we asked a different question: What if the agents built on top of those models could learn to evolve their own instructions and architectures?

The answer redefines how we build, debug, and deploy intelligent systems.

The Problem: Building AI Agents Is Easy, Keeping Them Great Is Hard

You’ve spent hours crafting the perfect agent prompt. Your multi-agent system has elegant tasks and dependencies. Everything works beautifully… until it doesn’t.

- Your research agent starts missing key information

- Your writing agent’s output becomes repetitive

- Your customer service bot can’t handle edge cases

- Your team agent duplicates work or skips critical steps

Now what? You’re stuck on the prompt-engineering treadmill:

- Read through outputs to identify issues

- Guess what instruction changes might help

- Test manually

- Repeat 50 times

- Hope you didn’t break something that was working

There’s a better way.

Self-Improving Agents

Evolver introduces a radical idea: treat agent optimization as a search problem, where AI guides the search.

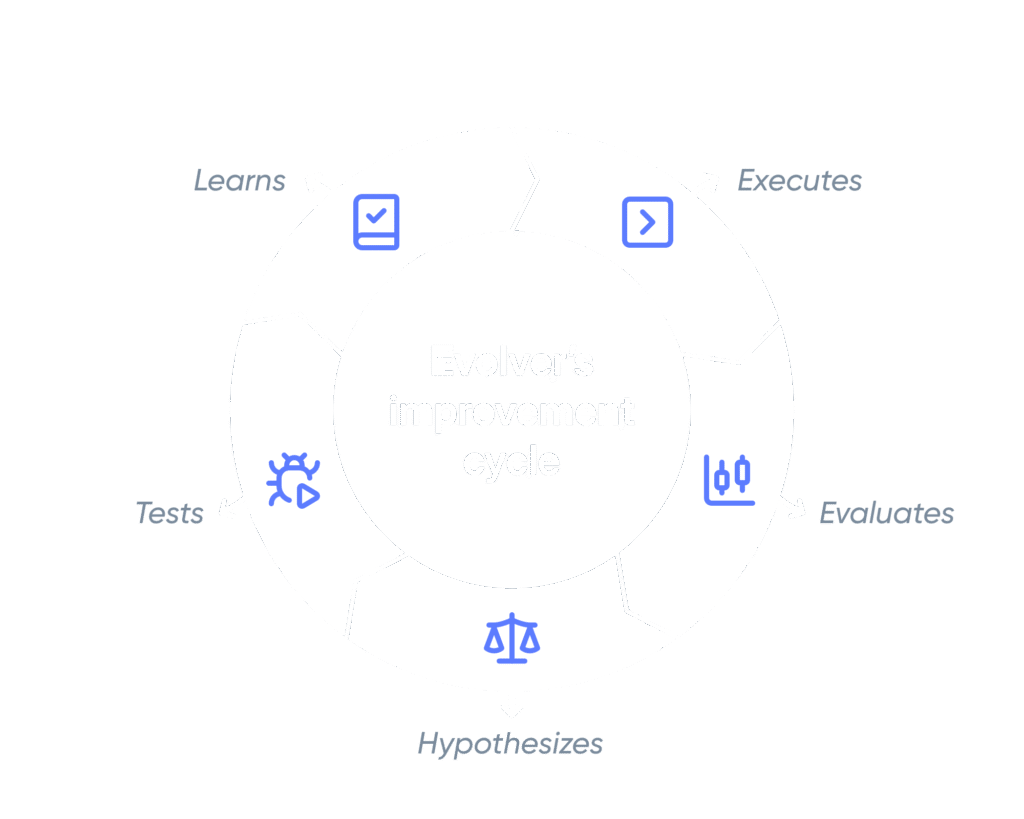

Instead of you manually iterating on prompts, Evolver:

- Executes your agent on real tasks

- Evaluates the output quality against criteria

- Hypothesizes what changes might improve performance

- Tests multiple variations in parallel

- Learns from what works and what doesn’t

- Repeats until it finds significantly better versions

Think of it as AI-guided A/B testing on steroids, running dozens of experiments while you grab coffee.
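The six steps above amount to a search loop. The Python sketch below is a toy, greedy hill-climbing version of that loop, not Evolver's implementation. `evolve_instructions`, `toy_evaluate`, `toy_propose`, and the `HINTS` rubric are hypothetical stand-ins for the LLM-driven agent run, evaluation, and hypothesis steps.

```python
from typing import Callable


def evolve_instructions(
    base: str,
    evaluate: Callable[[str], float],
    propose: Callable[[str], list[str]],
    max_generations: int = 10,
) -> tuple[str, float]:
    """Greedy search over instruction variants (toy version of the loop above)."""
    best, best_score = base, evaluate(base)       # score the baseline
    for _ in range(max_generations):
        candidates = propose(best)                # hypothesize changes
        if not candidates:
            break
        # Test every variation and keep the highest-scoring one.
        top_score, top = max((evaluate(c), c) for c in candidates)
        if top_score <= best_score:               # no gain: stop searching
            break
        best, best_score = top, top_score         # learn from what worked
    return best, best_score


# Hypothetical rubric: one point for each hint the instructions contain.
HINTS = ["Use concrete examples.", "Add clear headers.", "Cite sources."]


def toy_evaluate(instructions: str) -> float:
    return float(sum(hint in instructions for hint in HINTS))


def toy_propose(instructions: str) -> list[str]:
    # Hypothetical hypotheses: append any hint that is not present yet.
    return [f"{instructions} {hint}" for hint in HINTS if hint not in instructions]


best, score = evolve_instructions("Write blog posts.", toy_evaluate, toy_propose)
print(score, best)
```

A real system replaces `toy_evaluate` with an agent run plus an LLM judge, and `toy_propose` with LLM-generated hypotheses; the control flow of execute, evaluate, propose, and keep-if-better stays the same.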

This autonomous optimization approach is formalized in our research paper by Kamer Ali Yuksel, which details the iterative refinement process, LLM-driven feedback loops, and performance benchmarks across multiple domains.

Three Innovations That Make It Work

1. LLMs as both scientist and judge

The strength of Evolver lies in how it uses language models in two distinct roles:

As the Scientist: The LLM analyzes evaluation reports and proposes targeted modifications:

"The output lacks specific examples. Hypothesis: Adding 'Provide concrete real-world examples for each point' will improve clarity and engagement."

As the Judge: The same LLM evaluates outputs using structured criteria:

Clarity: 4/5 → 4.5/5 ✓

Depth: 3/5 → 4/5 ✓

Engagement: 3.5/5 → 4/5 ✓

CONCLUSION: NEW OUTPUT IS BETTER OVERALL

This creates a self-supervised optimization loop in which the AI proposes, tests, and validates its own improvements.
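The judge's verdict can be made concrete. The Python sketch below reuses the example scores and combines them with an unweighted mean. The aggregation rule, the `judge` function, and the "KEEP CURRENT OUTPUT" label are assumptions, since the post does not say how Evolver's judge weighs criteria, and in Evolver the scores themselves come from an LLM.

```python
def judge(
    old: dict[str, float], new: dict[str, float]
) -> tuple[dict[str, bool], float, float, str]:
    """Compare two outputs criterion by criterion, then overall (unweighted mean)."""
    improved = {criterion: new[criterion] > old[criterion] for criterion in old}
    old_avg = sum(old.values()) / len(old)
    new_avg = sum(new.values()) / len(new)
    verdict = "NEW OUTPUT IS BETTER OVERALL" if new_avg > old_avg else "KEEP CURRENT OUTPUT"
    return improved, old_avg, new_avg, verdict


# Scores (out of 5) from the judge example in this post.
old_scores = {"Clarity": 4.0, "Depth": 3.0, "Engagement": 3.5}
new_scores = {"Clarity": 4.5, "Depth": 4.0, "Engagement": 4.0}

improved, old_avg, new_avg, verdict = judge(old_scores, new_scores)
for criterion, better in improved.items():
    status = "improved" if better else "not improved"
    print(f"{criterion}: {old_scores[criterion]:g}/5 -> {new_scores[criterion]:g}/5 ({status})")
print(f"Mean: {old_avg:.2f} -> {new_avg:.2f}")
print(f"CONCLUSION: {verdict}")
```

With these numbers the mean rises from 3.50 to 4.17, so the new output wins. A weighted mean, or a rule requiring no criterion to regress, would be equally plausible choices.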

2. Parallel exploration with memory

Most prompt engineering is sequential: try one thing, then another. Evolver runs 10+ variations simultaneously, testing diverse hypotheses in parallel:

- Variant 1: "Think step-by-step before answering"

- Variant 2: "Provide specific examples from the last 5 years"

- Variant 3: "Structure your response with clear headers"

- …and 7 more, all running at once

But here’s the key part: it remembers failures. When a modification doesn’t improve performance, Evolver records it in an archive for future reference.

Future iterations avoid these dead ends, preventing the system from getting stuck trying variations of the same failed approach.
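Parallel exploration with a failure archive can be sketched with Python's standard `concurrent.futures`. In this toy version, `run_generation`, the `HELPFUL` scoring, and the base instruction are hypothetical; Evolver's LLM-generated mutations and LLM-based evaluation are replaced by a keyword check. The second call shows the archive at work: the variant that failed once is not evaluated again.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def run_generation(
    base: str,
    mutations: list[str],
    evaluate: Callable[[str], float],
    archive: set[str],
    max_workers: int = 10,
) -> list[tuple[float, str]]:
    """Score untried mutations in parallel; archive the ones that don't help."""
    base_score = evaluate(base)
    fresh = [m for m in mutations if m not in archive]   # skip known dead ends
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        scores = list(pool.map(lambda m: evaluate(f"{base} {m}"), fresh))
    winners = []
    for mutation, score in zip(fresh, scores):
        if score > base_score:
            winners.append((score, mutation))
        else:
            archive.add(mutation)                        # remember the failure
    return sorted(winners, reverse=True)


# Hypothetical scoring: only two of the three example variants help this toy task.
HELPFUL = ["Think step-by-step before answering", "Structure your response with clear headers"]
calls: list[str] = []


def toy_evaluate(instructions: str) -> float:
    calls.append(instructions)                           # record every evaluation
    return float(sum(phrase in instructions for phrase in HELPFUL))


variants = [
    "Think step-by-step before answering",
    "Provide specific examples from the last 5 years",
    "Structure your response with clear headers",
]
archive: set[str] = set()
first = run_generation("Answer research questions.", variants, toy_evaluate, archive)
calls.clear()
second = run_generation("Answer research questions.", variants, toy_evaluate, archive)
print(first)
print(archive)
print(len(calls))  # baseline + the two variants that are not archived
```

An exact-string archive is a simplification: avoiding *variations* of a failed approach, as described above, would need fuzzier matching, for example asking an LLM whether a new hypothesis restates an archived one.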

3. Evolving criteria, not just agents

The most sophisticated touch: the evaluation criteria themselves evolve as the agent improves.

Initially: "Output should be clear and relevant"

After evolution: "Output should provide data-backed insights with specific examples from the last 3 years, structured in sections with actionable recommendations, and cite at least 3 authoritative sources."

The system learns what “good” means for your specific use case, becoming more discerning as your agent becomes more capable.
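One way to picture evolving criteria is a rubric that tightens once it saturates. In the Python sketch below, the saturation rule (every recent score at or above a hypothetical `ceiling` of 4.5), the `evolve_criteria` helper, and the fixed list of stricter candidates are all assumptions made for illustration; the post does not describe Evolver's actual mechanism.

```python
def evolve_criteria(
    criteria: list[str],
    recent_scores: list[float],
    candidates: list[str],
    ceiling: float = 4.5,
) -> list[str]:
    """Add a stricter criterion once the current rubric stops discriminating."""
    if not recent_scores or min(recent_scores) < ceiling:
        return criteria                    # rubric still separates strong and weak outputs
    for candidate in candidates:
        if candidate not in criteria:
            return criteria + [candidate]  # raise the bar by one criterion
    return criteria                        # nothing stricter left to add


criteria = ["Output should be clear and relevant"]
# Stricter requirements drawn from the evolved criterion quoted in this post.
stricter = [
    "Provide data-backed insights",
    "Include specific examples from the last 3 years",
    "Structure the output in sections with actionable recommendations",
    "Cite at least 3 authoritative sources",
]

criteria = evolve_criteria(criteria, [4.6, 4.8, 4.7], stricter)  # saturated: tighten
criteria = evolve_criteria(criteria, [3.9, 4.8], stricter)       # not saturated: unchanged
print(criteria)
```

The point of the design is that a rubric every output passes gives the optimizer no signal; adding a harder criterion restores a gradient to climb.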

Two Flavors of Evolution: Choose Your Strategy

Instruction tuning: Optimize agent prompts

Transforms generic agent instructions into sophisticated, specialized guidance through automated testing. Your “write blog posts” agent discovers exactly how to structure content, use examples, and strike the right tone—without you guessing. Impact: better output quality in minutes.

Team tuning: Orchestrate multi-agent systems

Optimizes team agents—restructuring subagents, refining tasks and dependencies to eliminate redundancy. Vague responsibilities evolve into specialized functions with precise task scoping and logical dependencies. Impact: better task decomposition, faster execution.
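Team tuning works on a structure of subagents, tasks, and dependencies. The Python sketch below models a hypothetical three-agent team as a dependency graph using the standard-library `graphlib`, and shows two checks a tuner could run on a candidate structure: whether a valid execution order exists (no circular dependencies) and which tasks are owned by more than one subagent. The task names, `assignments`, and both helpers are illustrative assumptions, not Evolver's internal representation.

```python
from collections import Counter
from graphlib import CycleError, TopologicalSorter

# Hypothetical team: each task maps to the set of tasks it depends on.
tasks = {
    "search_sources": set(),
    "summarize_findings": {"search_sources"},
    "draft_report": {"summarize_findings"},
    "review_report": {"draft_report"},
}

# Hypothetical assignment of tasks to subagents.
assignments = {
    "researcher": ["search_sources", "summarize_findings"],
    "writer": ["summarize_findings", "draft_report"],
    "editor": ["review_report"],
}


def execution_order(task_graph: dict[str, set[str]]) -> list[str]:
    """Return a valid run order; raises CycleError if dependencies loop."""
    return list(TopologicalSorter(task_graph).static_order())


def duplicated_tasks(owner_map: dict[str, list[str]]) -> list[str]:
    """Tasks claimed by more than one subagent, i.e. redundant work."""
    counts = Counter(task for owned in owner_map.values() for task in owned)
    return sorted(task for task, n in counts.items() if n > 1)


print(execution_order(tasks))
print(duplicated_tasks(assignments))

try:
    execution_order({"draft": {"review"}, "review": {"draft"}})
except CycleError as err:
    print("circular dependency:", err.args[1])
```

Here `summarize_findings` is claimed by both the researcher and the writer, which is the kind of redundancy a restructured team would eliminate.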

Use Case: The Self-Improving Customer Service Bot

Imagine a customer service system that:

Week 1: Handles basic queries about shipping and returns

Agent instructions: "Help customers with orders"

Performance: 3.5/5

Week 4: Customers start asking about a new loyalty program

Auto-evolved instructions: "Help with orders. For loyalty program questions, explain the points system and referral bonuses"

Performance: 4.1/5

Week 8: The holiday season brings a surge in gift-related questions

Auto-evolved instructions: Now include gift-wrapping options, gift-message formatting, and holiday delivery timing

Performance: 4.4/5

The agent learns from real conversations and adapts to changing user needs without engineering intervention.

Other use cases

- Adaptive research assistants: A research agent optimized for AI trends automatically adapts when users pivot to blockchain questions, retuning itself overnight to match actual user needs with no redeployment required.

- Multi-agent workflow optimization: A three-agent research team suffers from redundant work and unclear roles. Evolver restructures responsibilities and dependencies, for example yielding 40% faster execution and 25% better coherence.

- Security-critical code review: A code review agent catches bugs but misses vulnerabilities. Evolver adds explicit security checks (SQL injection, XSS, authentication), for example improving coverage from 3/5 to 4.8/5.

- Multi-segment personalization: One support bot automatically evolves into specialized variants for enterprise vs. individual customers, each optimized for its audience’s communication preferences.

The Implications: A New Paradigm for Agent Development

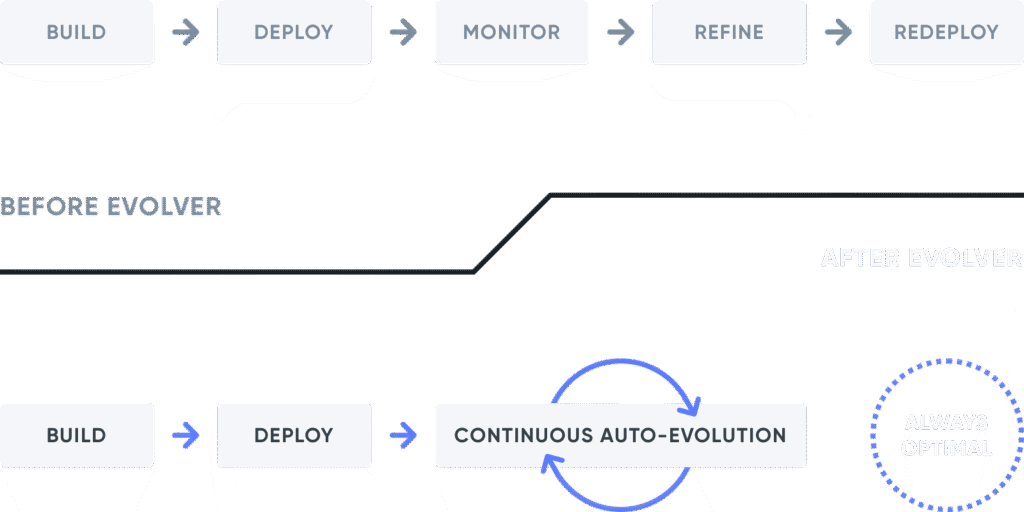

Before, agents were static systems. Drift happens, performance degrades, and fixes rely on human intervention.

After Evolver, agents adapt to new patterns, learn from outcomes, and self-improve continuously without redeployment.

AI shifts from manual maintenance to autonomous evolution, staying optimized as the world around it changes.

What this enables

- Rapid prototyping: Go from idea to optimized agent in an afternoon, not a sprint.

- Non-expert agent building: Product managers can define what they want (criteria), and Evolver figures out how.

- Automatic A/B testing: Deploy multiple evolving variants and automatically converge on the best.

- Self-healing systems: Agents detect performance degradation and auto-correct.

- Personalization at scale: One agent, thousands of evolved variants tailored to different user segments.

The Vision: Agents That Never Stop Getting Better

Imagine a future where:

- Your code review agent evolves as your codebase grows, learning project-specific patterns

- Your data analyst agent adapts to new schema changes without manual updates

- Your customer service team collectively learns from every interaction, sharing improvements across all instances

- Your research assistant tracks emerging topics and automatically expands its expertise

All of this happens within a controlled, auditable process—nothing evolves without human-in-the-loop approval. Every change is benchmarked, fine-tuned, and documented through a comprehensive evaluation report. Evolver brings the rigor of model benchmarking and the transparency of open review to agent improvement.

This isn’t science fiction. It’s the next layer of intelligence in production—self-improving, transparent, and always under your control.

The Bigger Picture: aiXplain’s Agentic Infrastructure

aiXplain’s vision is to build an agent-based infrastructure—a world where agents don’t just assist, but run the show.

The first layer of this infrastructure is powered by micro-agents like the Mentalist, Orchestrator, Bodyguard, and Inspector. Together, they act as the AgentOps team: decomposing complex goals, routing tasks, coordinating execution, securing interactions, and monitoring behavior in real time. They enable agents to operate safely, adaptively, and autonomously at runtime.

The second layer introduces meta-agents like Evolver. These oversee the agents themselves—providing external intelligence for design, debugging, evaluation, and continuous self-improvement. Evolver extends agent autonomy beyond execution into self-governance and growth.

This layered design—micro-agents for operation, meta-agents for evolution—forms the foundation of aiXplain’s agentic infrastructure: scalable, trustworthy, and built for a future where intelligent systems manage themselves with human oversight.

Getting Started

Evolver is designed for real-world use:

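```python
from aixplain.enums import EvolveType  # import path assumed; check the aiXplain SDK reference
from aixplain.factories import TeamAgentFactory

# Load an existing team agent; "<TEAM_AGENT_ID>" is a placeholder.
agent = TeamAgentFactory.get("<TEAM_AGENT_ID>")

# Team tuning restructures subagents, tasks, and dependencies.
response = agent.evolve(
    evolve_type=EvolveType.TEAM_TUNING,
    max_successful_generations=2,
    max_failed_generation_retries=1,
    max_iterations=50,
    max_non_improving_generations=3,
)

evolved_agent = response.data["evolved_agent"]
print(evolved_agent)
print(response.data["evaluation_report"])
```

Configure evolution for your needs: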

- Quick iteration: 2-3 generations, 15-30 minutes

- Deep optimization: 10+ generations, 2-3 hours

- Production tuning: Continuous evolution on real traffic
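The four limits passed to `agent.evolve` in the snippet above act as stopping rules. The post does not define their exact semantics, so the Python simulator below encodes one plausible reading, which is an assumption: stop after `max_successful_generations` improving generations, after `max_non_improving_generations` consecutive generations without gain, after more than `max_failed_generation_retries` consecutive failed (errored) generations, or once `max_iterations` attempts are used. `simulate_stopping` and its outcome labels are hypothetical; the aiXplain SDK documentation is the authority on real behavior.

```python
def simulate_stopping(
    outcomes: list[str],
    max_successful_generations: int = 2,
    max_failed_generation_retries: int = 1,
    max_iterations: int = 50,
    max_non_improving_generations: int = 3,
) -> tuple[int, str]:
    """Replay generation outcomes ("improved", "no_gain", "error") against the limits.

    Returns (attempts used, reason for stopping) under an assumed reading of the limits.
    """
    successes = non_improving = failed_in_a_row = 0
    for attempt, outcome in enumerate(outcomes[:max_iterations], start=1):
        if outcome == "error":
            failed_in_a_row += 1
            if failed_in_a_row > max_failed_generation_retries:
                return attempt, "too many failed generations"
            continue                                   # retry the failed generation
        failed_in_a_row = 0
        if outcome == "improved":
            successes += 1
            non_improving = 0
            if successes >= max_successful_generations:
                return attempt, "reached target number of successful generations"
        else:
            non_improving += 1
            if non_improving >= max_non_improving_generations:
                return attempt, "stalled: no recent improvement"
    return min(len(outcomes), max_iterations), "ran out of attempts"


print(simulate_stopping(["no_gain", "improved", "error", "improved"]))
print(simulate_stopping(["no_gain"] * 5))
print(simulate_stopping(["error", "error"]))
```

Under this reading, "Quick iteration" keeps `max_successful_generations` small (the snippet uses 2), while "Deep optimization" raises it to 10 or more and relies on `max_non_improving_generations` to stop early when progress stalls.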

Explore the documentation to learn how to activate Evolver, review evaluations, and guide your agents’ evolution responsibly.

Why This Matters

Evolver is the first meta AI agent that makes AI agents truly autonomous—not just in execution, but in self-improvement.

We’re not replacing prompt engineers. We’re giving them superpowers. Instead of manually testing hundreds of variations, you define what “good” looks like, and Evolver finds the path there.

The era of static agents is over. Welcome to the era of evolution.

We’re Just Getting Started

On the roadmap:

- Multi-objective optimization: Balance speed, quality, and cost simultaneously

- Transfer learning: Apply lessons from one agent to accelerate evolution of others

- Evolutionary ensembles: Maintain diverse variants for different contexts

- Human-in-the-loop evolution: Incorporate user feedback into the optimization loop

The potential is enormous. Agents that evolve. Systems that heal themselves. AI that gets better at being AI.


The future isn’t just intelligent agents. It’s agents that get more intelligent every day.

Want to try Evolver and see your agents self-evolve? Talk to our team
